A Critical Strategic Assessment for Technical Leaders and Security Professionals

By Edward Meyman, FERZ LLC

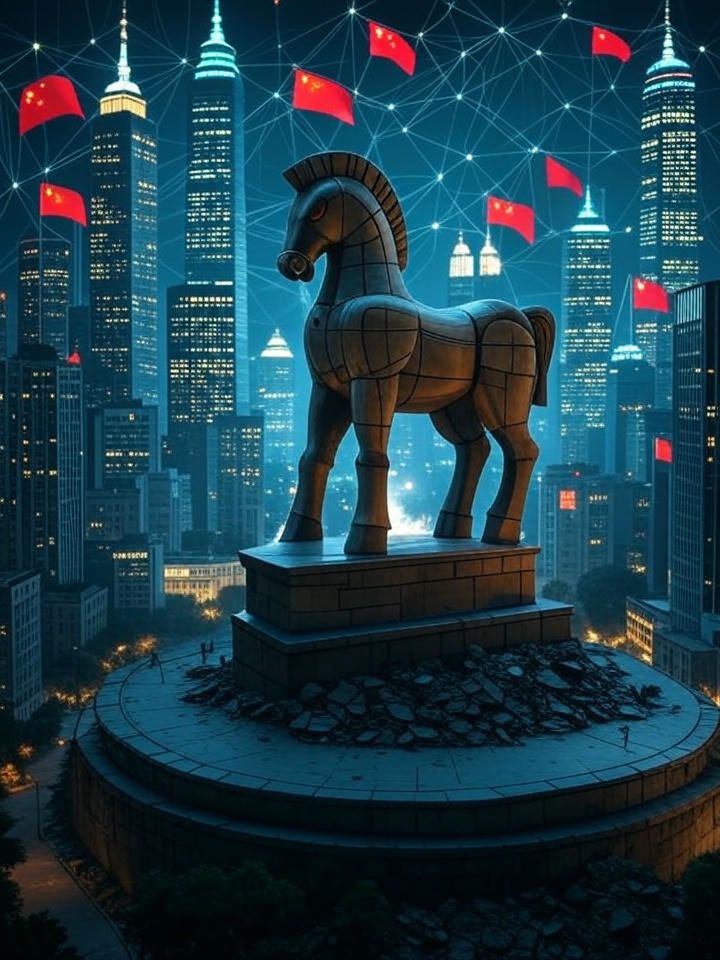

The rapid global adoption of Chinese AI models like DeepSeek represents one of the most significant strategic vulnerabilities facing Western organizations today. While these systems appear to offer cost-effective alternatives to commercial solutions, they introduce sophisticated risks that traditional cybersecurity frameworks are not designed to address.

This comprehensive 31-page technical analysis exposes the dangerous myths that have enabled widespread adoption of potentially compromised AI systems and provides practical frameworks for organizations to assess and mitigate these threats while maintaining innovation capabilities.

What You’ll Learn

Critical Threat Mechanisms

- Emission-based intelligence gathering that operates without network connectivity

- Semantic backdoor activation systems triggered by organizational contexts

- Identity-based targeting for high-value defense contractors and tech companies

- Multi-stage activation chains designed to evade detection protocols

Practical Implementation Tools

- Deployable security countermeasure code and frameworks

- Risk-graduated organizational assessment methodologies

- Step-by-step guides for air-gapped deployment solutions

- Input sanitization and behavioral monitoring systems

Strategic Decision Frameworks

- Economic cost modeling that accounts for hidden risks and switching costs

- Risk classification matrices for different application types

- Phased implementation strategies for organizations of all sizes

- Regulatory compliance guidance for evolving AI security requirements

Real-World Context

- Analysis of China’s National Intelligence Law and mandatory cooperation requirements

- Historical precedents from telecommunications and solar panel market penetration

- Case studies demonstrating how technical vulnerabilities enable strategic intelligence gathering

- Academic research foundation supporting documented attack vectors

Who Should Read This

Chief Technology Officers seeking to understand the technical risks of foreign AI adoption while maintaining competitive innovation capabilities

Chief Information Security Officers responsible for evaluating AI security frameworks and implementing practical risk mitigation strategies

Technical Architects designing AI deployment strategies that balance operational efficiency with strategic security requirements

Defense and Critical Infrastructure Organizations requiring comprehensive threat assessment for AI adoption in sensitive applications

Policy Makers and Regulatory Officials developing frameworks for AI governance and national technology security

Why This Analysis Matters Now

The technical community has developed dangerous misconceptions about AI security: that open source means safe, that local deployment eliminates foreign intelligence risks, and that air-gapping provides complete protection. These assumptions ignore sophisticated attack vectors that can be embedded directly into neural network weights during training.

As organizations increasingly integrate AI into critical workflows, the window for implementing effective security measures is rapidly closing. The cost of switching away from foreign AI systems rises sharply as integration deepens, making early strategic decisions critical for long-term technological independence.

This analysis provides the technical depth and practical tools necessary to make informed decisions about AI adoption—decisions that will shape competitive positioning and technological sovereignty for years to come.